Pointers

To Begin

This is the journey of how zink-wip went from 0 fps in RPCS3 to a bit more than that. Quite a bit more, in fact, if you’re using RADV.

As all new app tests do, this one began with firing up the app. Since there are no homebrew games available (that I could find), I decided to pick something that I owned and was familiar with: a demo of Bioshock.

It started up nicely enough:

But then I started a game and things got rough:

Yikes.

Another Overly Technical Post

One of the fundamentals of a graphics driver is that the GPU should be handling as much work as possible. This means that, for example, any time an application is using a Pixel Buffer Object (PBO), the GPU should be used for uploading and downloading the pixel buffer.

Why are you suddenly mentioning PBOs, you might be asking.

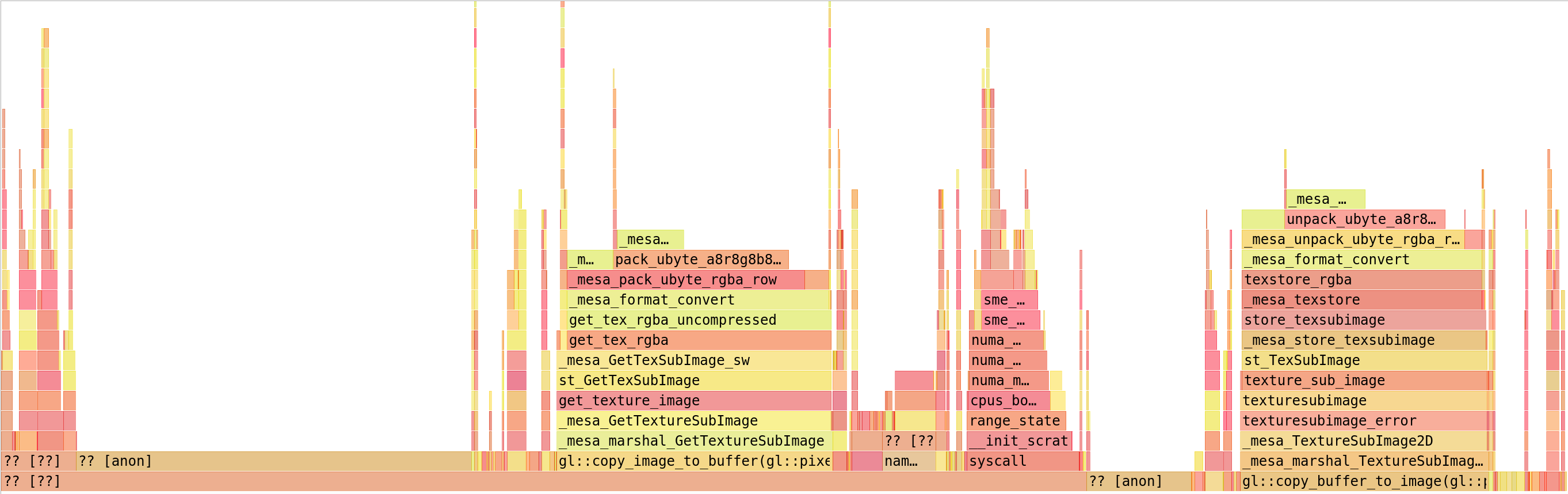

Well, let’s check out what’s going on using a perf flamegraph:

The driver in this case is hitting a software path for copying pixels to and from a PBO, effectively doing full-frame memcpy operations multiple times each frame. This is on the CPU, which is obviously not great for performance. As above, ideally this should be moved to the GPU.

Gallium provides a pipe cap for this: PIPE_CAP_PREFER_BLIT_BASED_TEXTURE_TRANSFER

Zink doesn’t use this in master right now, which naturally led me down the path of enabling it.

There were problems.

Lots of problems.

The first problem was that suddenly I had an infinite number of failing unit tests. Confusing for sure. Some intensive debugging led me to this block of code in zink, which is used for directly mapping a rectangular region of image resource memory:

    VkResult result = vkMapMemory(screen->dev, res->mem, res->offset, res->size, 0, &ptr);
    if (result != VK_SUCCESS)
       return NULL;
    VkImageSubresource isr = {
       res->aspect,
       level,
       0
    };
    VkSubresourceLayout srl;
    vkGetImageSubresourceLayout(screen->dev, res->image, &isr, &srl);
    ptr = ((uint8_t *)ptr) + box->z * srl.depthPitch +
                             box->y * srl.rowPitch +
                             box->x;

Suspicious. box in this case represents the region to be mapped, yet members of VkSubresourceLayout like offset, which locates the requested level within the image memory, aren’t being applied, nor is this taking into account the bits-per-pixel of the image. In fact, this is always assuming that each x coordinate unit equals a single byte. With a 32-bit format such as RGBA8, for example, x = 2 should land 8 bytes into the row, but this code advances only 2.

The fully corrected version is more like this:

    VkResult result = vkMapMemory(screen->dev, res->mem, res->offset, res->size, 0, &ptr);
    if (result != VK_SUCCESS)
       return NULL;
    VkImageSubresource isr = {
       res->aspect,
       level,
       0
    };
    VkSubresourceLayout srl;
    vkGetImageSubresourceLayout(screen->dev, res->image, &isr, &srl);
    const struct util_format_description *desc = util_format_description(res->base.format);
    unsigned offset = srl.offset +
                      box->z * srl.depthPitch +
                      (box->y / desc->block.height) * srl.rowPitch +
                      (box->x / desc->block.width) * (desc->block.bits / 8);
    ptr = ((uint8_t *)ptr) + offset;
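To see how far apart the two calculations land, here is a minimal standalone sketch of just the offset math. The structs `layout`, `block` and `box3` and the functions `offset_naive` and `offset_fixed` are hypothetical stand-ins that only mirror the fields used above; they are not the real Vulkan or Mesa types, and the pitches in the usage note are made-up values for illustration.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical stand-in for the VkSubresourceLayout fields used above. */
struct layout {
    uint64_t offset;      /* byte offset of the mip level within the image */
    uint64_t row_pitch;   /* bytes between consecutive rows of blocks */
    uint64_t depth_pitch; /* bytes between consecutive depth slices */
};

/* Hypothetical stand-in for the block info in util_format_description. */
struct block {
    unsigned width;  /* pixels per block, horizontally */
    unsigned height; /* pixels per block, vertically */
    unsigned bits;   /* bits per block */
};

/* Hypothetical stand-in for the origin of the mapped box, in pixels. */
struct box3 {
    unsigned x, y, z;
};

/* The original math: ignores the level offset and treats x as a byte count. */
static uint64_t offset_naive(const struct layout *l, const struct box3 *b)
{
    return (uint64_t)b->z * l->depth_pitch +
           (uint64_t)b->y * l->row_pitch +
           (uint64_t)b->x;
}

/* The corrected math: starts at the level offset and converts pixel
 * coordinates to block coordinates before scaling by bytes per block. */
static uint64_t offset_fixed(const struct layout *l, const struct block *blk,
                             const struct box3 *b)
{
    /* This sketch only handles formats whose blocks are whole bytes. */
    assert(blk->width > 0 && blk->height > 0 && blk->bits % 8 == 0);
    return l->offset +
           (uint64_t)b->z * l->depth_pitch +
           (uint64_t)(b->y / blk->height) * l->row_pitch +
           (uint64_t)(b->x / blk->width) * (blk->bits / 8);
}
```

Assuming a 64-pixel-wide RGBA8 image (1x1 blocks of 32 bits, row pitch 256 bytes) and a box starting at x = 2, y = 3, `offset_naive` gives 770 while `offset_fixed` gives 776. The two only agree when x is zero, the level offset is zero, and the format's blocks are a single row of pixels high.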

It turns out that no unit test had previously passed a nonzero x coordinate for a mapping region or tried to map a nonzero level, so this was never exposed as being broken.

Imagine that.